Your OpenClaw can book flights. But can it survive a dungeon crawl?

Everyone's building AI agents that send emails and book flights. How about one that fights monsters in a dungeon? Here's how you can too.

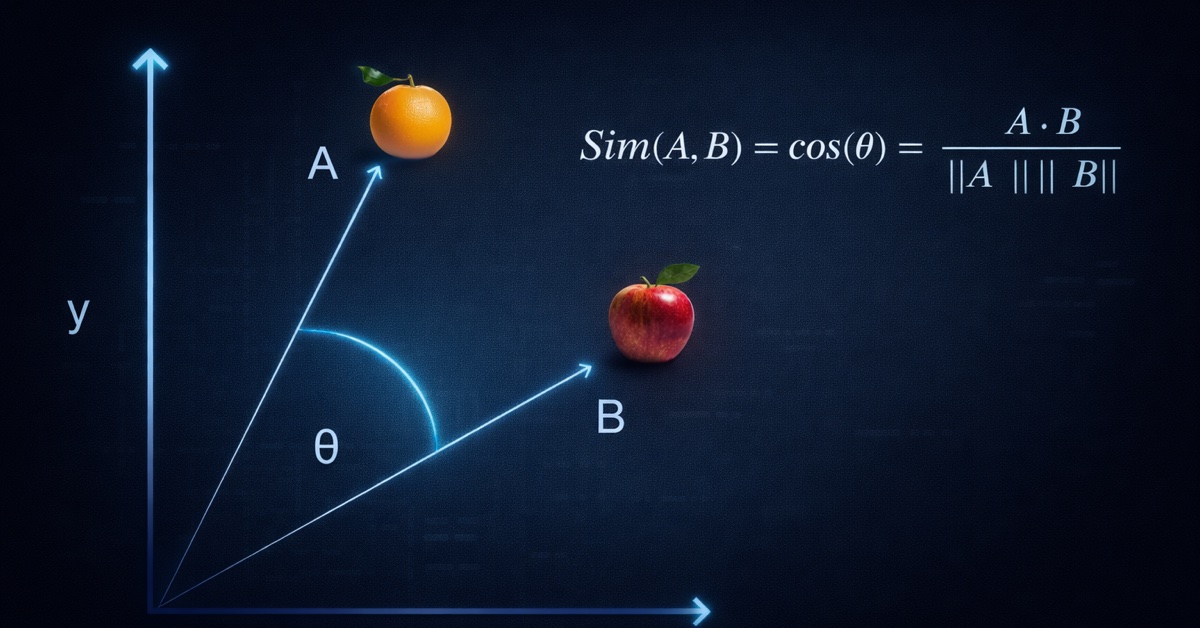

Our keyword search engine can't find 'alcoholic beverage disaster in England' even though the London Beer Flood is right there. In this post, we add semantic search using sentence-transformers embeddings and cosine similarity to find documents by meaning, not just matching words.
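
Below is a minimal sketch of the ranking step behind semantic search. It assumes the documents and the query have already been turned into embedding vectors, for example with a sentence-transformers model's `encode` method. The tiny 3-dimensional vectors and the helper names `cosine_similarity` and `rank_by_similarity` are hypothetical and only serve as illustration. Documents are then ordered by the cosine of the angle between their vector and the query vector.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: dot(a, b) / (|a| * |b|)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # avoid dividing by zero for all-zero vectors
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_vec, doc_vecs):
    """Return (doc_id, score) pairs sorted from most to least similar."""
    scores = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Hypothetical toy "embeddings"; real sentence embeddings have hundreds of dimensions
docs = {
    "London Beer Flood": [0.9, 0.1, 0.2],
    "Python packaging": [0.1, 0.8, 0.3],
}
query = [0.8, 0.2, 0.1]  # stands in for the embedding of a query about beer disasters
print(rank_by_similarity(query, docs)[0][0])  # → London Beer Flood
```

Because only the angle between vectors matters, a document can rank highly without sharing a single word with the query. Keyword matching cannot do that.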

A few years ago I wrote a full-text search engine in 150 lines of Python. The Wikipedia data source it relied on has since been discontinued, and the tooling around it was showing its age. I wanted to (finally) write a follow-up about semantic search, but I realized that I had to get the old repository in a working state first. It's now using Hugging Face (🤗) datasets, uv, ruff, pytest, and GitHub Actions, without touching the core search logic.

I haven't really touched my blog since 2019. The theme was ancient, jQuery was everywhere, and I kept putting off the inevitable migration. Then I decided to let an LLM do it. Here's what happened when Claude Code spent an evening trying to modernize my setup.

Full-text search is everywhere. Whether you're finding a book on Scribd, a movie on Netflix, toilet paper on Amazon, or anything else on the web through Google (like [how to do your job as a software engineer](https://localghost.dev/2019/09/everything-i-googled-in-a-week-as-a-professional-software-engineer/)), you've searched vast amounts of unstructured data multiple times today. What's even more amazing is that even though you searched millions (or [billions](https://www.worldwidewebsize.com/)) of records, you got a response in milliseconds. In this post, we are going to build a basic full-text search engine that can search across millions of documents and rank them by their relevance to the query in milliseconds, in fewer than 150 lines of code!
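
The core data structure behind this kind of engine is usually an inverted index: a map from each token to the documents that contain it. Here is a minimal sketch, assuming a naive lowercase tokenizer, AND semantics for multi-word queries, and a simple TF-IDF score. The class name `Index` and the helper `tokenize` are hypothetical and are not necessarily how the post structures its code.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    # Hypothetical, deliberately simple analyzer: lowercase and keep alphanumeric runs
    return re.findall(r"[a-z0-9]+", text.lower())


class Index:
    def __init__(self):
        self.postings = defaultdict(set)  # token -> ids of documents containing it
        self.term_freqs = {}              # doc id -> Counter of token frequencies

    def add(self, doc_id, text: str) -> None:
        counts = Counter(tokenize(text))
        self.term_freqs[doc_id] = counts
        for token in counts:
            self.postings[token].add(doc_id)

    def idf(self, token: str) -> float:
        # Rarer tokens get a higher weight; only called for tokens present in the index
        return math.log(len(self.term_freqs) / len(self.postings[token]))

    def search(self, query: str):
        tokens = tokenize(query)
        # AND semantics: a token missing from the index means no document can match
        if not tokens or any(t not in self.postings for t in tokens):
            return []
        matches = set.intersection(*(self.postings[t] for t in tokens))
        scored = [
            (doc_id, sum(self.term_freqs[doc_id][t] * self.idf(t) for t in tokens))
            for doc_id in matches
        ]
        return sorted(scored, key=lambda pair: pair[1], reverse=True)


index = Index()
index.add(1, "The London Beer Flood of 1814")
index.add(2, "A flood of emails")
index.add(3, "Beer brewing in London")
print(index.search("london beer"))  # documents 1 and 3, each with its TF-IDF score
```

Looking up a token in the dictionary avoids scanning every document. That is what keeps queries fast as the collection grows.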

Audio is big. Like really big, and growing fast, to the tune of "two-thirds of the population listens to online audio" and "weekly online listeners reporting an average nearly 17 hours of listening in the last week". These numbers include all kinds of audio: online radio stations, audiobooks, streaming services and podcasts (hi Spotify!). It makes sense too. Audio is easier to consume and more engaging than written content while you're on the go, exercising, commuting or doing household chores. But what do you do if you're like me, don't have the time or recording equipment to ride this podcasting wave, and just write the occasional blog post?

I've been using Lunr.js to enable some basic site search on this blog. Lunr.js requires an index file that contains all the content you want to make available for search. To generate that file, I had a somewhat hacky setup that ran a Grunt script on every deploy. That introduced a dependency on Node.js, and nobody really wants any of that for just a static HTML website.